Hattie's Claim in Visible Learning

Hattie's claim was that complex educational influences could be isolated, then measured & compared in terms of Student Achievement via a simple statistic, the effect size (d), to determine "what works best" - details here.

Summary of Issues with Hattie's Representation of Student Achievement

1. Student Achievement is only one aspect of a wider set of purposes of schooling. Hattie admits this,

"Of course, there are many outcomes of schooling, such as attitudes, physical outcomes, belongingness, respect, citizenship, and the love of learning. This book focuses on student achievement, and that is a limitation of this review" (VL, p. 6).

2. Student achievement can be measured in many different ways & the resulting effect size can change significantly solely because of the test used. As such, many academics argue that it is not possible to directly compare effect sizes from different studies to determine 'what works best', e.g., DuPaul & Eckert (2012),

"It is difficult to compare effect size estimates across research design types. Not only are effect size estimates calculated differently for each research design, but there appear to be differences in the types of outcome measures used across designs" (p. 408).

3. Later in Visible Learning, Hattie confounds what Achievement is and how it can be measured by promoting Bereiter’s model of learning. Hattie stated,

"There needs to be a major shift, therefore, from an over-reliance on surface information (the first world) and a misplaced assumption that the goal of education is deep understanding or development of thinking skills (the second world), towards a balance of surface and deep learning leading to students more successfully constructing defensible theories of knowing and reality (the third world)...

the focus of this book is on achievement outcomes. Now this notion has been expanded to achievement outcomes across the three worlds of understanding" (VL, p. 28-29).

4. The difference in what achievement is & how it can be measured leads to serious Validity & Reliability issues in Visible Learning.

Is There Agreement as to the Purpose of Schooling?

Examples of Other Student Outcomes

Hattie regularly cites the New Zealand Government's study by Alton-Lee (2003) which states,

"Quality teaching cannot be defined without reference to outcomes for students." (p. 8)

Hattie agrees. In his 2005 ACER lecture, he states,

"The latest craze, begun by the OECD is to include key competencies or ‘essence’ statements and this seems, at long last, to get closer to the core of what students need. Key competencies include thinking, making meaning, managing self, relation to others, and participating and contributing. Indeed, such powerful discussions must ensue around the nature of what are ‘student outcomes’ as this should inform what kinds of data need to be collected to thence enhance teaching and learning" (p. 14).

Alton-Lee (2003) continued to outline the outcomes in her jurisdiction,

"The term 'achievement' encompasses achievement in the essential learning areas, the essential skills, including social and co-operative skills, the commonly held values including the development of respect for others, tolerance (rangimärie), non-racist behaviour, fairness, caring or compassion (aroha), diligence and hospitality or generosity (manaakitanga). Educational outcomes include attitudes to learning, and behaviours and other outcomes demonstrating the shared values. Educational outcomes include cultural identity, well-being, whanau spirit and preparation for democratic and global citizenship... Along with ... the outcome goals for Mäori students:

Goal 1: to live as Mäori.

Goal 2: to actively participate as citizens of the world.

Goal 3: to enjoy good health and a high standard of living." (p. 7)

In Australia, I particularly like Prof Jane Wilkinson (2021) quoting the Wurundjeri Elders from southern New South Wales -

"Learning to live well, in a World worth living in."

The Director-General of the high-achieving Finnish system, Pasi Sahlberg, outlines Finnish values in Finnish Lessons 2.0 (p. 101),

"one purpose of formal schooling is to transfer cultural heritage, values, and aspirations from one generation to another. Teachers are, according to their own opinions, essential players in building the Finnish welfare society. As in countries around the world, teachers in Finland have served as critical transmitters of culture..."

Then in the Australian Teacher Magazine March 2019, Sahlberg says,

"...policy makers the world over have become incarcerated by their own narrow assessment of what true student 'growth' entails...

If you look at the Gonski 2.0, it's all about measuring growth... for most people 'growth' means how you progress academically, and how you are measuring that academic growth.

It's not about health or wellbeing, or developing children's identity or social skills or other areas... politicians and bureaucrats are kind of imprisoned by this...

They don't realise that Singapore, for example, really envies Finland because of this play [approach], ... because they realise that what they do is unsustainable." (p. 13)

John Marsden, a popular novelist and English teacher, started an alternative school, Candlebark, which has one of the best thought-out purposes of schooling that I've seen - here.

"Education is about cultural enrichment. It is about knowing the world you inhabit. It’s about political engagement and performing your civic role."

The most popular TED talk, Ken Robinson's "Do schools kill creativity?", emphasises that creativity should be an important outcome of education.

The Foundation for Young Australians in partnership with business and government organisations promotes the following outcomes-

Burstein writes a relevant article on this issue- Educators Don’t Agree on What Whole Child Education Means. Here’s Why It Matters.

Focus on the Purpose of Education

Professor Alan Reid in his powerful book, Changing Australian Education: How policy is taking us backwards and what can be done about it, emphasises the need to re-visit and define the purpose of education before any discussion of improvement or change can occur.

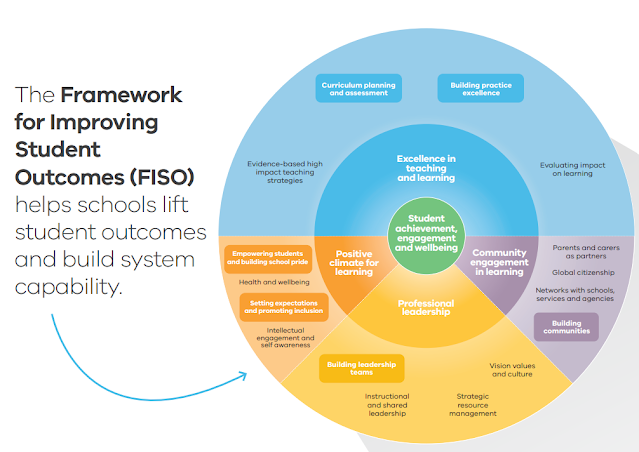

In my state, Victoria, with its focus on Achievement and High Impact Strategies (based on Hattie), there is very little focus on our other 2 aims of schooling - Engagement and Wellbeing.

Student Achievement is Measured in Many Different Ways

A major issue with comparing different studies is that student achievement is measured in many different ways, e.g., tests, standardised tests, projects, assignments, essays, observations, self assessment, peer assessment, etc.

Many peer reviews question Hattie's method of comparing effect sizes of student achievement from different studies which use different forms of assessment, e.g., DuPaul & Eckert (2012), Hooley (2013), Bergeron & Rivard (2017), Simpson (2019), Kraft (2020), Nielsen & Klitmøller (2021).

Dwayne Sacker, summarising Noel Wilson's seminal 1997 dissertation “Educational Standards and the Problem of Error”, in which Wilson delves into the nature of assessment, writes,

"Now since there is no agreement on a standard unit of learning, there is no exemplar of that standard unit and there is no measuring device calibrated against the said non-existent standard unit, how is it possible to “measure the non-observable”?

THE TESTS MEASURE NOTHING for how is it possible to “measure” the non-observable with a non-existing measuring device that is not calibrated against a non-existing standard unit of learning??

Pure logical insanity!"

Worse - Surrogates are used for Student Achievement

The focus of VL is student achievement and in Hattie's 2005 ACER slide presentation, he warned NOT to use standardised tests or surrogates for ability measures.

Wecker et al. (2017) confirm this, saying that Hattie mistakenly included studies that do not measure academic performance (p. 28).

Blatchford (2016b) also raises this issue about Hattie,

"it is odd that so much weight is attached to studies that don't directly address the topic on which the conclusions are made" (p. 13).

Hattie & Clinton (2008) re-emphasise the issues of standardised testing.

"The standardised tests that are usually administered often do not reflect the classroom curriculum and learning objectives of the teachers at a particular time." (p. 320)

However, once again Hattie contradicts this & his 2005 ACER proclamation and does include many studies which use standardised tests, which often do not test the specific material teachers are teaching and students are learning.

Greene also writes about this problem - Stop Talking About Student Achievement.

Effect Sizes of Standardised versus Specific Tests

Hattie's key statistic is the Effect Size, which he claimed was based on Student Achievement - details here.

However, a number of researchers detail that standardised tests yield significantly lower effect sizes than specific tests for the same intervention (Slavin (2019), Simpson (2017) & Bergeron & Rivard (2017)).
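To see why the choice of test matters, it helps to look at how the statistic is built. The sketch below assumes the common pooled-standard-deviation form of Cohen's d (the studies in Visible Learning may use other variants), and every number in it is hypothetical: the same 5-point gain is divided by a small spread on a narrow, curriculum-aligned test and by a large spread on a broad standardised test.

```python
import math


def cohens_d(mean_treat, mean_ctrl, sd_treat, sd_ctrl, n_treat, n_ctrl):
    """Standardised mean difference using the pooled standard deviation."""
    pooled_var = (
        (n_treat - 1) * sd_treat ** 2 + (n_ctrl - 1) * sd_ctrl ** 2
    ) / (n_treat + n_ctrl - 2)
    return (mean_treat - mean_ctrl) / math.sqrt(pooled_var)


# Hypothetical numbers, not taken from any study cited here.
# The same raw gain (5 points) is measured on two different tests.
d_specific = cohens_d(55, 50, 5, 5, 30, 30)        # narrow test, small spread
d_standardised = cohens_d(55, 50, 25, 25, 30, 30)  # broad test, large spread

print(f"specific test:     d = {d_specific:.2f}")
print(f"standardised test: d = {d_standardised:.2f}")
```

With these made-up inputs the first d is 1.00 and the second is 0.20, a five-fold difference with no change in the intervention itself.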

Dylan Wiliam also details this problem,

"Effect sizes depend on how sensitive the assessments used to measure student progress are the things that teachers are changing. In one study ... the effects of feedback in STEM subjects was measured with tests that measured what the students had actually been learning, the effect sizes were five times greater than when achievement was measured with standardised tests.

Which of these is the 'correct' figure is obviously a matter of debate. The important point is that assessments differ in their sensitivity to the effects of teaching, which therefore affects effect sizes."

Wiliam (2016) displays the findings of a study by Ruiz-Primo et al. (2002) showing the differences in effect size versus the type of test used to measure it.

Ruiz-Primo et al. (2002) use the following categories:

Wiliam uses this research to show that the effect size can range from 0.20 to 1.20 depending on the test used. Wiliam's interview by Ashman goes into more detail here, from the 51-minute mark onward.

"Different ways of measuring the same outcome construct – for example, achievement – may result in different effect size estimates even when the interventions and samples are similar." (p. 10)

Poulsen (2014) concurs,

"Only if the learning outcomes are measured with digitized multiple-choice test of exactly the same version with the same built-in calculation models, the data can be directly compared" (p. 4, translated from Danish).

Kraft (2021) in his discussion with Hattie,

"We both agree that the magnitude of an effect size depends a lot on what outcome you measure. Studies can find large effect sizes when they focus on more narrow or proximal outcomes that are directly aligned with the intervention and collected soon after. It is much easier to produce large improvements in teachers' self-efficacy than in the achievement of their students. In my view, this renders universal effect size benchmarks impractical."

Wolf & Harbatkin (2022) reviewed 373 studies with 1553 effect sizes. The measures used in each study were then classified in four categories: independent broad, when the measure was not created by the same researchers/developers who conducted the study or designed the program and was intended to evaluate student achievement in a subject; independent narrow, similar to the previous one but included measures intended to evaluate specific elements of a subject area; non-independent developer, when the measure was created by the developer of the program under evaluation; and non-independent researcher, when the measure was created by the authors of the study.

The results showed a large difference between effect sizes of independent and non-independent measures. Broad measures had a mean effect size of +0.10, narrow measures of +0.17, researcher measures of +0.38, and developer measures of +0.41.
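The arithmetic behind that comparison is simple enough to check. The sketch below uses the four means quoted above; the ratios to the broad-measure baseline and the unweighted average are illustrative calculations added here, not figures reported by Wolf & Harbatkin.

```python
# Mean effect sizes by measure type, as quoted above from Wolf & Harbatkin (2022).
mean_effect_sizes = {
    "independent broad": 0.10,
    "independent narrow": 0.17,
    "non-independent researcher": 0.38,
    "non-independent developer": 0.41,
}

baseline = mean_effect_sizes["independent broad"]

# How many times larger each category's mean is than the broad-measure mean.
ratios = {name: round(es / baseline, 1) for name, es in mean_effect_sizes.items()}

# Illustrative only: an unweighted average across the four categories,
# showing how a single pooled number hides the spread between them.
naive_average = sum(mean_effect_sizes.values()) / len(mean_effect_sizes)

for name, ratio in ratios.items():
    print(f"{name}: {ratio}x the broad-measure mean")
print(f"unweighted average of the four means: {naive_average:.3f}")
```

Developer-made measures come out at roughly four times the broad-measure mean, while the single averaged figure (about 0.27) sits between the independent and non-independent groups and describes neither.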

Jack Buckley, Commissioner of the U.S. Department of Education’s National Center for Education Statistics, on international standardised tests,

"People like to take international results... and focus on high performers and pick out areas of policy that support the policies that they support... I never expect tests like these to tell us what works in education. That’s like taking a thermometer to explain why it’s cold outside."

Different Standardised Tests also Yield Different Effect Sizes

Simpson (2018b, p. 4-5) even shows the problems of comparing effect sizes from 2 different standardised tests,

'... an RCT was designed involving over 500 pupils across over 40 schools. The programme involved an intensive one-to one mathematics intervention intended for children in Key Stage 1 (ages 6–7) performing at the lowest 5% level nationally...

Part of the analysis involved two different tests. The primary test was the Progress in Mathematics 6 (PIM) test conducted in January 2010. The secondary test was the Sandwell Early Numeracy Test – Revised Test B (SENT-R-B) conducted in December 2009.

The effect size... for the PIM test was 0.33 and for the SENT-R-B test was 1.11.'

However, Gijbels et al. were actually looking at the different effect sizes derived from different tests. They proposed that since PBL is developing problem solving skills, tests which measure these skills will show higher effect sizes than a test on facts. (p. 32)

They report 3 totally different effect sizes from different tests (p. 43):

They conclude,

"...students studying in PBL classes demonstrated better understanding of the principles that link concepts (weighted average ES = 0.795) than did students who were exposed to conventional instruction... students in PBL were better at the third level of the knowledge structure (weighted average ES = 0.339) than were students in conventional classes. It is important to note that the weighted average ES of 0.795, belonging to the second level of the knowledge structure, was the only statistically significant result." (p. 44).

Yet, Hattie only reports one effect size of 0.32 from this study. It is difficult to determine how Hattie got this result; I'm guessing he averaged all the effect sizes reported in the study.

The Shape of Assessment

David Didau, in his insightful blog post, The Shape of Assessment, analyses achievement tests from another angle, but his analysis is congruent with Ruiz-Primo et al. (2002) above.

Didau states,

"On the whole, the purpose of assessment data appears to be discriminating between students."

Didau argues that,

"the purpose of a test attempting to assess how much of the curriculum has been learned should not be interested in discriminating between students."

Didau then gives 2 illustrative graphs comparing this Discriminatory Assessment with what he calls Mastery Assessment.

Hacke (2010) also illustrates the inconsistency of using different types of tests: criterion-referenced tests are intended to measure how well a person has learned a specific body of knowledge and skills, whereas norm-referenced tests are developed to compare students with each other and are designed to produce a variance in scores. She cites a unique study by Harris and Sass (2007, p. 109), who examined the influence of teacher certification (NBC) using two different types of assessment data from the state of Florida, which administers both norm-referenced and criterion-referenced tests. Their comparison revealed that the effect of NBC was negative for both reading and mathematics on the norm-referenced test, whereas on the criterion-referenced assessments it was positive.

Hattie compares these 2 different forms of assessment as if they were the same; once again, a Validity problem.

Tests of Different Lengths Also Give Different Effect Sizes

The Impact of Test Length on Effect Size
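One standard explanation for this comes from classical test theory, and it is offered here as an assumption rather than as the argument of the linked post: shorter tests tend to be less reliable, and unreliable measurement inflates the observed spread of scores, which shrinks the effect size. The sketch below combines the Spearman-Brown prophecy formula with the usual attenuation relation (observed d equals true d times the square root of the test's reliability). The starting reliability of 0.5 and the true effect of 0.5 are hypothetical.

```python
import math


def spearman_brown(reliability, length_factor):
    """Predicted reliability when a test is lengthened by `length_factor`."""
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


def attenuated_d(true_d, reliability):
    """Observed effect size under classical test theory measurement error."""
    return true_d * math.sqrt(reliability)


TRUE_D = 0.5             # hypothetical error-free effect size
SHORT_RELIABILITY = 0.5  # hypothetical reliability of the short test

for factor in (1, 2, 4):
    rel = spearman_brown(SHORT_RELIABILITY, factor)
    print(f"length x{factor}: reliability = {rel:.2f}, "
          f"observed d = {attenuated_d(TRUE_D, rel):.2f}")
```

Under these assumptions, the observed effect size rises from about 0.35 to about 0.45 as the test is made four times longer, even though the underlying effect never changes.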

Peer Reviews of Hattie Comparing Different Forms of Achievement

Rømer (2016) in Criticism of Hattie's theory about Visible Learning,

'The relevant methodological question is... How does Hattie define "achievement outcome/learning outcomes/learning", which is his central effect concept...

The theoretical uncertainties are amplified in the empirical analyses, which are deeply colored by the lack of comprehension. No clue as far as I can see how "achievement" is operationalized, whether it is surface learning, deep learning or construction learning or, for that matter, something completely fourth that is measured?' (p. 5, translated from Danish).

Larsen (2014) in Know thy impact – blind spots in John Hattie’s evidence credo.

"The first among several flaws in Hattie's book: what is an effect?

John Hattie never explains what the substance of an effect is. What is an effect’s ontology, its way of being in the world? Does it consist of something as simple as a correct answer on a multiple-choice task, the absence of arithmetic and spelling errors? And may all the power of teaching and learning processes (including abstract and imaginative thinking, history of ideas and concepts, historical knowledge, dedicated experiments, hands-on insights, sudden lucidity, social and language criticism, profound existential discussions, social bonding, and personal, social, and cultural challenges) all translate into an effect score without loss? Such basic and highly important philosophical and methodological questions do not seem to concern the evidence preaching practitioner and missionary Hattie" (p. 3).

"We also get no information about how "learning outcomes" are defined or measured in the studies at different levels, what tests are used, which subjects are tested and how."

Hooley (2013) casts doubt on the validity of Hattie's research,

"Have problems of validity been discussed concerning original testing procedures on which meta-analysis is based? ... It has been assumed that test procedures are accurate and measure what they are said to measure. This is a major assumption ... As with all research the quality of the findings depend on the quality of the research design - including the instruments (measures that assess students) and the sampling design; (criteria for selection, size and representativeness of the sample, etc)" (p. 43).

Conclusion

Depending on the test used to measure an intervention, the effect size can differ significantly. This casts significant doubt on Hattie's prime claim that it is possible to determine 'what works best' by way of effect size.

But worse, many of the studies Hattie used measured something else, a surrogate, e.g., hyperactivity, behaviour, attention, concentration, engagement, and IQ.

This has caused many peer reviewers to doubt Hattie's claims, e.g.

Bergeron & Rivard (2017) - "pseudo-science" &

Dylan Wiliam - "nonsense"!

Another Elephant walks into the Achievement Room

Hattie promotes Bereiter’s model of learning,

"Knowledge building includes thinking of alternatives, thinking of criticisms, proposing experimental tests, deriving one object from another, proposing a problem, proposing a solution, and criticising the solution..." (VL p. 27).

"There needs to be a major shift, therefore, from an over-reliance on surface information (the first world) and a misplaced assumption that the goal of education is deep understanding or development of thinking skills (the second world), towards a balance of surface and deep learning leading to students more successfully constructing defensible theories of knowing and reality (the third world)" (p. 28).

"the focus of this book is on achievement outcomes. Now this notion has been expanded to achievement outcomes across the three worlds of understanding" (p. 29).

Note: I have not found a study in Hattie's synthesis that measures outcomes in the so-called 'second world', let alone the 'third world'!

Proulx (2017) also identifies this inherent problem,

"ironically, Hattie self-criticizes implicitly if we rely on his book beginning affirmations, then that it affirms the importance of the three types learning in education."

He quotes Hattie from VL,

"But the task of teaching and learning best comes together when we attend to all three levels: ideas, thinking, and constructing." (VL, p. 26)

"It is critical to note that the claim is not that surface knowledge is necessarily bad and that deep knowledge is essentially good. Instead, the claim is that it is important to have the right balance: you need surface to have deep; and you need to have surface and deep knowledge and understanding in a context or set of domain knowledge. The process of learning is a journey from ideas to understanding to constructing and onwards." (VL, p. 29)

From this quote, Prof Proulx goes on to say,

"So with this comment, Hattie discredits his own work on which it bases itself to decide on what represents the good ways to teach. Indeed, since the studies he has synthesized to draw his conclusions are not going in the sense of what he himself says represent a good teaching, how can he rely on it to draw conclusions about the teaching itself?"

One of the earliest critiques, Snook et al. (2009) also raise this issue,

"He also has to concede that the form of 'learning' which he discusses is, itself, severely limited: Having distinguished three levels of learning (surface, deep, and conceptual), he says in one of his conclusions: 'A limitation of many of the results in this book is that they are more related to the surface and deep knowing and less to conceptual understanding' (p. 249). And yet, conceptual knowing or understanding is what he thinks should be the result of good teaching. Clearly there is less to be drawn from his synthesis than commentators have suggested. Much depends on the kind of learning that is desired in formal education." (p. 4)

Nielsen & Klitmøller (2017) also discuss Hattie's promotion of Bereiter’s model of learning and the inconsistency with the way achievement is measured (p. 13).

Contradictions with Hattie's Earlier Work on Achievement

"An obvious and simple method would be to investigate the effects of passing and failing NBPTS teachers on student test scores. Despite the simplicity of this notion, we do not support this approach.

... student test scores depend on multiple factors, many of which are out of the control of the teacher.

... if test scores are to be used in teacher effectiveness studies, it is important to recognise that:

a. student achievement must be related to a particular teacher instruction, as students can achieve as a consequence of many other forms of instruction (e.g., via homework, television, reading books at home, interacting with parents and peers, lessons from other teachers);

b. a teacher can impart much information that may be unrelated to the curriculum;

c. students are likely to differ in their initial levels of knowledge and skills, and the prescribed curricula may be better matched to the needs of some than others;

d. differential achievement may be related to varying levels of out-of-school support for learning;

e. teachers can be provided with different levels and types of instructional support, teaching loads, quality of materials, school climates, and peer cultures;

f. instructional support from other teachers can take a variety of forms (particularly in middle and high schools where students constantly move between teachers); and

g. teaching artefacts, such as the match of test content to prescribed curricula, levels of student motivation, and test-wiseness can distort the effects of teacher effects.

Such a list is daunting and makes the task of relating teaching effectiveness to student outcomes most difficult. The present study does not, therefore, use student test scores to assess the impact of the NBCTs on student learning."

In 2000, Hattie was part of a team of 4 academics that ran a validity study for the US National Board Certification System. He rejected the use of student test scores as a measure of teacher performance, claiming,

"It is not too much of an exaggeration to state that such measures have been cited as a cause of all of the nation’s considerable problems in educating our youth... It is in their uses as measures of individual teacher effectiveness and quality that such measures are particularly inappropriate."

In a remarkable turnaround from his earlier work, Visible Learning and his software 'asTTle' do use the simplistic approach of student tests and ignore his own principles (as outlined above), thus disregarding the impact of other significant influences on student achievement.

An Example of the difficulties of assessing and ranking

Teacher Performance Pay

Hattie has been a strong advocate of teacher performance pay and has promoted his software 'asTTle' for this purpose. Based on the discussion above, 'asTTle' has a very narrow measure of student achievement, which is concerning to many academics, for example,

Professor Ewald Terhart (2011, p. 434),

"A part of the criticism on Hattie condemns his close links to the New Zealand Government and is suspicious of his own economic interests in the spread of his assessment and training programme (asTTle).

Similarly, he is accused of advertising elements of performance-related pay of teachers and he is being criticised for the use of asTTle as the administrative tool for scaling teacher performance. His neglect of social backgrounds, inequality, racism, etc., and issues of school structure is also held against him...

However, there is also criticism concerning Hattie’s conception of teaching and teachers. Hattie is accused of propagating a teacher-centred, highly directive form of classroom teaching, which is characterised essentially by constant performance assessments directed to the students and to teachers."

We are a long way from agreeing on student outcomes and consistently defining what achievement is, let alone measuring it!

Breakspear (2014, p. 11) warns,

"Narrow indicators should not be equated with the end-goals of education."

In his excellent paper 'School Leadership and the cult of the guru: the neo-Taylorism of Hattie', Professor Scott Eacott also warns (p. 9),

"Hattie’s work has provided school administrators with a science of teaching. The teaching and learning process is no longer hidden in the minds of learners, but made visible. This sensory experience can be used as the data for the generation of data – therefore making it measurable – and evidence informed decisions on what to do. The data is an extension of educational administration, in the era of data, if there is no evidence of learning then it did not happen. Furthermore, if there is no evidence of learning, then teaching did not happen and this is a performance issue to be managed by administrators."

Other Commentary on Achievement

Eric Kalenze in his ResearchEd talk uses a powerful analogy of Schools using a swimming pool to train students for a Marathon.
